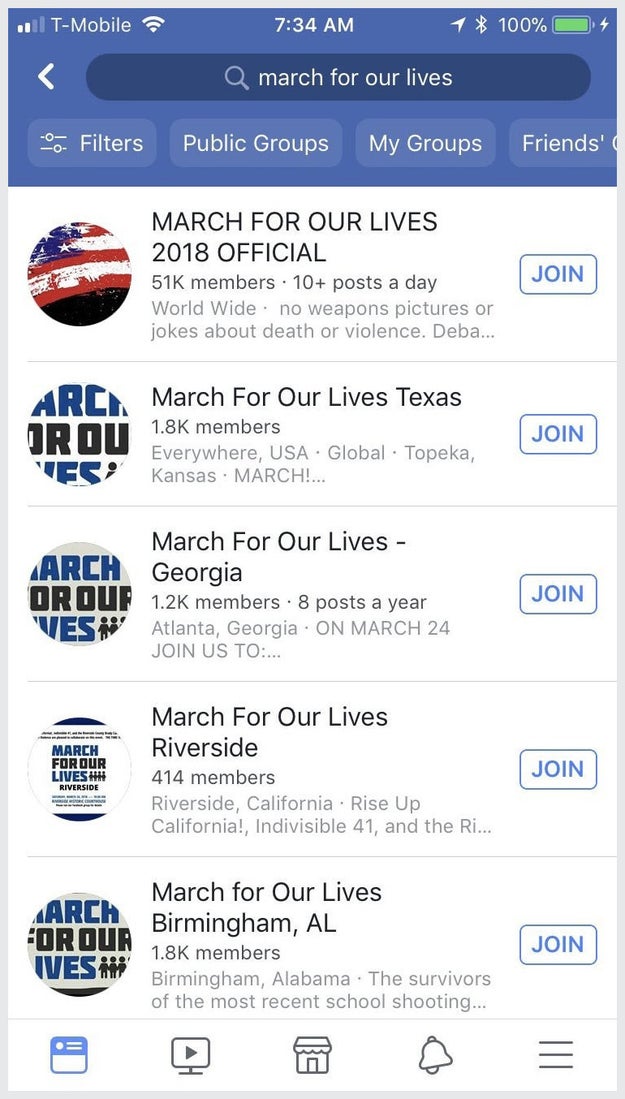

One week after the mass shooting in Parkland, Florida, those searching on Facebook for information about the upcoming March for Our Lives were likely to be shown an active group with more than 50,000 members.

Called “March for Our Lives 2018 Official,” it appeared to be one of the best places to get details about the event and connect with others interested in gun control. But those who joined the group soon found themselves puzzled. The admins often posted pro-gun information and unrelated memes and mocked those who posted about gun control.

“I'm a retired federal law enforcement special agent. There is and never has been any reason for a civilian to have a high-capacity high velocity weapon,” posted one member on Feb. 20.

“Shutup fed and stop trying to spread your NWO BS,” was the top reply, which came from one of the group’s admins. (NWO is a reference to the “new world order” conspiracy theory.)

A few days later the group’s name was changed to “Kim Jong Un Fan Club,” and members continued to wonder what was going on.

The simple answer is they were being trolled. The more complicated one is that while Facebook groups may offer a positive experience for millions of people around the world, they have also become a global honeypot of spam, fake news, conspiracies, health misinformation, harassment, hacking, trolling, scams, and other threats to users, according to reporting by BuzzFeed News, findings from researchers, and the recent indictment of 13 Russians for their alleged efforts to interfere in the US election.

And it could get worse. Facebook recently announced that group content will receive more prominent placement in the News Feed and that groups overall will be a core product focus to help reverse an unprecedented decline in active users in the US and Canada. The unintended consequence is that the more than a billion users active in groups are being placed on a collision course with the hackers, trolls, and other bad actors who will follow Facebook’s lead and make groups even more of a focus for their activities.

“This vision could backfire terribly: an increase in the weight of ‘groups’ means reinforcement of Facebook’s worst features — cognitive bubbles — where users are kept in silos fueled by a torrent of fake news and extremism,” wrote Frederic Filloux, coauthor of the influential weekly media commentary newsletter Monday Note.

Renee DiResta, a security researcher who studied anti-vaccine groups on Facebook, told BuzzFeed News that groups are already a preferred target for bad actors.

“Propagandists and spammers need to amass an audience, and groups serve that up on a platter. There’s no need to run an ad campaign to find people receptive to your message if you can simply join relevant groups and start posting,” she said.

To her point, the recent indictment of the 13 Russians repeatedly cited Facebook groups as a focus of workers at the Internet Research Agency.

“By 2016, the size of many organization-controlled groups had grown to hundreds of thousands of online followers,” the indictment said.


One part of the Russian effort on Facebook left unmentioned in the Mueller indictment, and not previously reported, is that Facebook’s group recommendation system encouraged fans of troll-run pages such as Blacktivist and Heart of Texas to organize themselves into groups based on their shared affinity for a page. To this day there remain many groups with automatically generated names such as “Friends Who Like Blacktivist” or “Friends Who Like Heart of Texas.” These groups appear to be small and inactive, but the fact remains that Facebook prompted Americans to organize into fan groups of Russian troll pages.

There’s no question that groups offer value to many Facebook users. They keep friends and former classmates close, and played a key role in helping organize the West Virginia teachers’ strike. They are the basis for a thriving global community of buy, sell, and barter networks. Groups were used to raise money for victims of the Las Vegas shooting, and help provide support to, and prevent suicide among, US military personnel. Facebook groups can restrict membership by being designated “secret” or “closed,” and as a result people often share deeply personal information and experiences because they feel protected from the prying eyes of search engines and the public at large.

That’s the case for Female IN (FIN), a secret Facebook group that today boasts a membership of more than 1.5 million women around the world. It was created by Lola Omolola, a Nigerian-born journalist now living in Chicago. She started it after more than 250 schoolgirls were kidnapped by a terrorist group in Nigeria. Many of the women in the group live in, or are originally from, African countries. But Omolola said it has grown to include women from other parts of the world.

Secret groups like FIN are completely invisible to anyone who is not a member, and Facebook users can only join if they are invited by a current member. This is different from closed groups, which can be found via search, show up on user profiles, and enable anyone to request to be added to the group.

“We discuss everything from everyday stuff like your children, your healthcare, and your individual interactions with people. We go from there to talking about domestic violence. People are sharing in real time what happens at their homes,” Omolola told BuzzFeed News in an interview facilitated by Facebook’s PR team. “Generally we are very actively and thoughtfully healing a population of women who are used to being silenced, and whose voices have never really historically meant that much.”

But even a group that Facebook itself points to as an example of the product’s value has to wage a battle against bad actors. Omolola and other FIN admins work constantly to keep men and spammers out of the group. They have to warn members about imposter FIN groups on Facebook that try to leverage her group’s success and brand among women. A post to the public FIN Facebook page from last fall warned women of a “FAKE FIN run by A MAN!!!”

“Some people try to use the [FIN] name to get a lot of people to their groups,” Omolola said. “We have contacted Facebook and Facebook knows about it, and are working on ways to protect us.”

Omolola says she is working on trademarking the group’s name so she can ask Facebook to enforce her trademark and take imposter groups down faster. Right now, it’s mostly up to her and her members to go into fake FIN groups and warn people that they are scams.

Jennifer Dulski, the head of groups and community at Facebook, told BuzzFeed News that there is now a dedicated “integrity” team for groups, which includes people who specialize in security, product, machine learning, and content moderation. She pointed to new tools launched last year that help admins screen and easily remove members, and that let admins clearly state the rules of a given group on its About page. (Omolola said these new features are all big time savers.)

“There is some negative content and behavior happening on Facebook, including in groups, and we take that really seriously,” Dulski said.

She emphasized that the experience for most users is positive.

“It’s amazing to see what is actually happening in Facebook groups, and the vast majority of activity and content in these groups is not only positive but also meaningful for the people involved in them.”

That messaging (bad content and actors make up a tiny fraction of overall groups activity) echoes what Mark Zuckerberg said after questions were raised about the spread of misinformation on Facebook’s platform and its impact on the 2016 election.

DiResta sees a parallel, suggesting groups are at the same stage pages and the News Feed were at prior to Facebook’s 2016 wake-up call about fake news: rife with manipulation and lacking proper oversight.

“We need to avoid a repeat of the kind of persuasive manipulation we saw on pages, so Facebook needs to pay attention to these issues as they continue to increase their emphasis on groups,” DiResta said.

That emphasis on groups is now a cornerstone of Zuckerberg’s vision for the company and product. Last summer he announced an ambitious goal to drastically increase the number of Facebook users in “meaningful” groups from 100 million to 1 billion.

“If we can do this, it will not only turn around the whole decline in community membership we've seen for decades, it will start to strengthen our social fabric and bring the world closer together,” he said in a speech at Facebook’s first Community Summit last summer.

But to get to a billion, Facebook will have to acknowledge and battle the spammers, hackers, and trolls who constantly exploit, take over, and buy and sell groups in order to make money or sow chaos. It will also have to confront the fact that its own recommendation engine at times pushes users into conspiracy theorist groups, or into those geared toward trolling, harassment, or illicit online activity.


The global exploitation of groups

As the 2016 election moved into its final stretch, members of a Facebook group called Republicans Suck suddenly experienced an influx of new members who spammed the group with links to questionable stories on websites they’d never heard of.

Bobby Ison, a Kentucky man who was a member of the group, told BuzzFeed News that he noticed the administrators and moderators of the group began to change and “some of them were obvious foreign accounts. Eastern European countries. Serbia, Bosnia, Croatia.”

Ison said he and others learned that the Facebook account of one of the group’s original admins had been hacked. With control of the admin’s Facebook profile, the hackers were then able to add whoever they wanted as admins, and remove the group’s original leaders.

“When they got his [account], they booted the other admins, and moved more of theirs in,” Ison said. “It was rather sad to witness.” (The admin whose account Ison said was hacked did not respond to inquiries from BuzzFeed News.)

Eventually, former admins and members fled to start a new, closed group called “Republicans Suck....again!!!!”

“As some of you know, the old REPUBLICANS SUCK has been hacked and taken over by hackers and trolls, which is something that is happening in several groups,” reads the pinned message for the group from one of its administrators.

Ison said the old group’s new overseas admins stepped up their spamming efforts in order to drive traffic from the group to their sites, presumably so they could earn ad revenue.

“It turned into a horrible page after they finally took over. Posting some of the most outlandish stories I've seen,” he said.

BuzzFeed News spoke via Facebook Messenger with one of the profiles currently listed as an admin of the group. That account has previously posted online about a Ford van for sale in Macedonia and written posts in Macedonian. Many of the account’s earliest posts in groups were for online moneymaking schemes. The person running the account said they were only added as an admin of the group two months earlier and didn't know anything about the group takeover last year.

“For the first time I hear from you that this group is stolen,” they wrote. They said they are not profiting in any way from the group. (BuzzFeed News could not confirm whether the profile’s name is linked to a real person.)

“We have no interest, we have no profit, we do not do with advertisements,” they said, and offered to make a BuzzFeed News reporter an admin of the group as a sign of goodwill.

“It would not be unusual to request to join 30 to 40 groups at a time, wait a few minutes to get approvals, and then spam links throughout those groups.”

from BuzzFeed - Tech https://www.buzzfeed.com/craigsilverman/how-facebook-groups-are-being-exploited-to-spread?utm_term=4ldqpia
