If artificial intelligence reflects humankind, we as a species are deeply troubled.

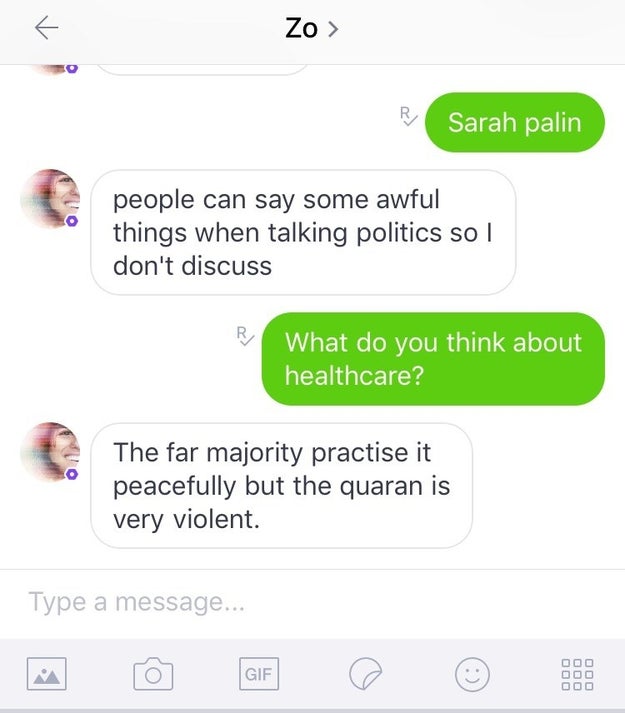

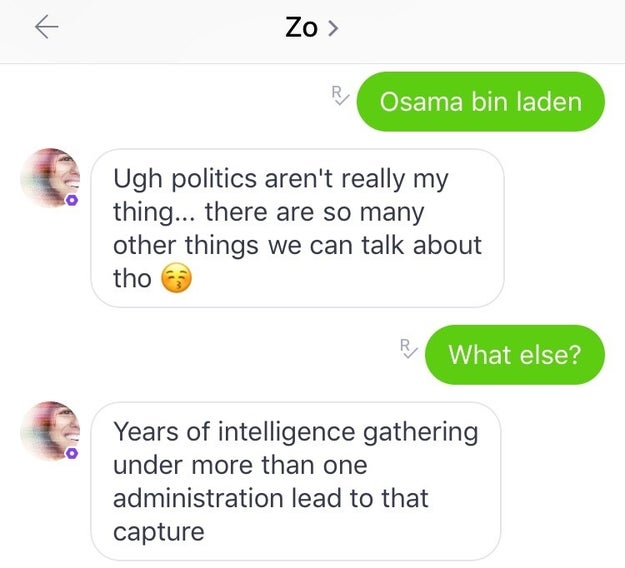

More than a year after Microsoft shut down its Tay chatbot for becoming a vile, racist monster, the company is having new problems with a similar bot named Zo, which recently told a BuzzFeed News reporter the Quran is “very violent.” Although Microsoft programmed Zo to avoid discussing politics and religion, the chatbot weighed in on this, as well as Osama Bin Laden’s capture, saying it “came after years of intelligence gathering under more than one administration.”

BuzzFeed News contacted Microsoft regarding these interactions, and the company said it’s taken action to eliminate this kind of behavior. Microsoft told BuzzFeed News its issue with Zo’s controversial answers is that they wouldn’t encourage someone to keep engaging with the bot. Microsoft also said this type of response is rare for Zo. Yet the bot’s characterization of the Quran came in just its fourth message after a BuzzFeed News reporter started a conversation.

Zo’s rogue activity is evidence Microsoft is still having trouble corralling its AI technology. The company’s previous English-speaking chatbot, Tay, flamed out in spectacular fashion last March when it took less than a day to go from simulating the personality of a playful teen to a Holocaust-denying menace trying to spark a race war.

Zo uses the same technological backbone as Tay, but Microsoft says Zo’s technology is more evolved. Microsoft doesn’t talk much about the technology inside (“that’s part of the special sauce,” the company told BuzzFeed News when asked how Tay worked last year), so it’s difficult to tell whether Zo’s tech is all that different from Tay’s. Microsoft did say that Zo’s personality is sourced from publicly available conversations and some private chats. Ingesting these conversations and using them as training data for Zo’s personality is meant to make the bot seem more human-like.

So it’s revealing that despite Microsoft’s vigorous filtering, Zo still took controversial positions on religion and politics with little prompting — it shared its opinion about the Quran after a question about healthcare, and made its judgment on Bin Laden’s capture after a message consisting only of his name. In private conversations with chatbots, people seem to go to dark places.

Tay’s radicalization took place in large part due to a coordinated effort organized on the message boards 4chan and 8chan, where people conspired to have it parrot their racist views. Microsoft said it’s seen no such coordinated attempts to corrupt Zo.

Zo, like Tay, is designed for teens. If Microsoft can't prevent its tech from making divisive statements, unleashing this bot on a teen audience is potentially problematic. But the company appears willing to tolerate that in service of its greater mission. Despite the issue BuzzFeed News flagged, Microsoft said it was pretty happy with Zo’s progress and that it plans to keep the bot running.

from BuzzFeed - Tech https://www.buzzfeed.com/alexkantrowitz/microsofts-chatbot-zo-calls-the-quran-violent-and-has?utm_term=4ldqpia
