When you hear about it from company insiders, Facebook’s system to protect its Trending column from bias sounds pretty bulletproof. The company put a bevy of safeguards in place to keep bias, or the perception of it, from creeping into the column (a short, rolling list of news events and topics that people are talking about on Facebook). Facebook presented this as a machine-based system in which human editors had little influence. In fact, that wasn't the case at all. It was a flawed system that created opportunities for human bias to appear, and the disclosure of those opportunities perhaps hurt the company as much as any actions its curators may have taken.

Facebook might have benefited from disclosing the review process it uses to strip any hint of political leaning from its Trending column, a process described by two former Trending curators. The company monitors its curators’ work closely, tracking their decisions, reviewing their story selection and headline suggestions, and sometimes re-reviewing those decisions after a story is published to the column. Facebook even opens the Trending team’s decisions to criticism from its larger employee base, in an attempt to bulletproof the operation.

And yet last week, Facebook found itself in precisely the position it strove to avoid. A former curator came forward — anonymously, thanks in part to Facebook’s own policies — and publicly accused the company of suppressing conservative viewpoints and news in the Trending column. The resulting firestorm exposed an ugly truth: Facebook never properly explained its policies for curating what is increasingly a very powerful news broadcast platform, and it’s still struggling to do so now.

There are processes and protocols in place for the curation of Trending. There are layers of oversight and instructions for the human element and, beneath that, an algorithm tracking topics and news events of global interest to Facebook’s audience. But there’s enough leeway in the Trending process that positioning it as something purely mechanical was a mistake.

When allegations surfaced that some Facebook Trending curators had suppressed conservative viewpoints in the column — poking holes in Facebook's public explanations of the column's inner workings in the process — what should have been a small blip turned into a full-blown controversy that’s now set off alarms as far as the U.S. Senate.

Trust the Process

The Trending team's mission is to separate the big news of the day from the wider world of what's bubbling on Facebook. “Anything can be trending online but that doesn’t mean it’s news. Our job was to find the news rather than find what’s going on on the internet,” said one former Facebook Trending curator, who spoke at length with BuzzFeed News (and asked to remain anonymous due to an NDA).


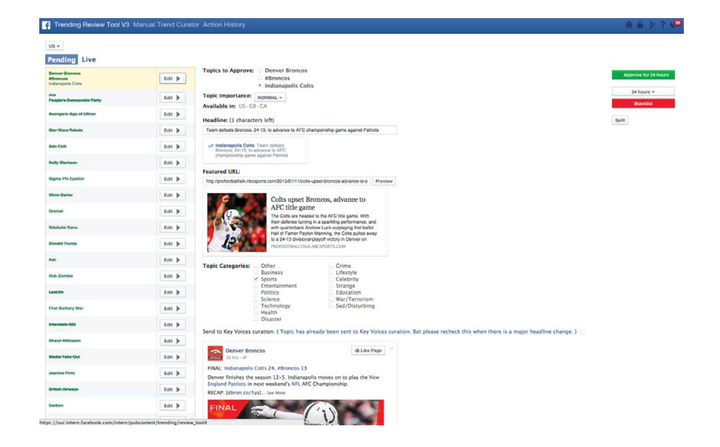

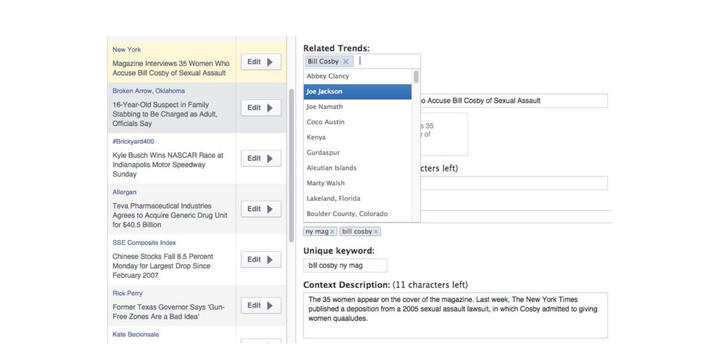

Another former curator spoke of an intense process for eliminating bias in Trending in an interview with BuzzFeed News. The Trending team, this former curator explained, works through a list of potential trending topics spit out by Facebook’s algorithm. When a topic is deemed cohesive (it must be newsworthy, not something like “Indian food,” a recurring rejected suggestion), the curators select it, write a headline and description, and pass it along to editors who further proof it for typos and bias. Everything the team does is logged, and if someone tries to insert their own opinions, that can be traced back. The curators are explicitly told to steer clear of bias.

“There’s a trail of breadcrumbs for everything you do,” the former curator said.

On top of all this, there is an internal Facebook group called the “Trending feedback group” where anyone at the company, from sales to engineering, can register complaints about Trending. For example, the former curator said one employee would regularly jump into the group after mass shootings and urge the Trending team not to cover them, citing research suggesting that news coverage was a motivating factor for these shooters. The team denied this employee’s requests.

Facebook needs these safeguards to protect the system from improper behavior on the part of the curators. The curators are able to downrank stories appearing within trend pages, so a liberal editor could try to downrank conservative publications within a trend, and vice versa. There’s also the matter of which stories the curators pick to include in the column. If someone with strong political leanings needed to pick a story and saw one not in line with their political beliefs, they might go ahead and pick another option. These scenarios, all offered by the former curators, are unlikely, but they are possible.

This confirms what others have reported: The Trending section is often steered by human judgment, contrary to the narrative Facebook long put forth. In past interviews, company representatives described an algorithmic system largely free of human intervention. As recently as December 2015, Facebook said an algorithm, not human curators, chose the stories featured in Trending.

If algorithms made all the decisions, then individual curators’ personal biases couldn't come into play. But it wasn't just an algorithm deciding what appeared in Trending. Human beings were also in the mix, which means their personal beliefs could influence which trending stories they selected, how those stories were described, and which media sources those stories linked out to. The bias accusations became far more explosive when it was revealed that curators could use an injection tool to insert topics into the Trending bar that the algorithm had not picked.

A Dose of Reality

The outcry over the bias allegations seemingly caught Facebook off guard. The company’s CEO, Mark Zuckerberg, took four days to respond. And Facebook still has not given a straight answer on whether it instructs curators to inject trends. But, company insiders explain, once you understand the cautious attitude the company takes internally with regard to political bias, that flat-footed response isn’t surprising.

Inside Facebook, the very idea of bias creeping into the system is taboo. “They think that any sort of oversight or thoughtfulness or discussion about it is unwarranted and unnecessary because of course they would never do it,” said a person with knowledge of Facebook’s thinking, speaking about the prospect of Facebook swinging an election. “It isn’t a great system of checks and balances.”

Both former curators BuzzFeed News spoke with said they think Facebook’s intentions are pure, and both said they saw nothing untoward. But those pure intentions may in fact have hurt the company in the end, because they kept it from seeing potential flaws that a bad actor could exploit. And if that's true of Trending, it is natural to ask whether the same is true of other parts of the platform. Could a rogue team of engineers, for example, swing a local election via a News Feed "test"? Better informing the public that uses its products, and opening its systems to public scrutiny, could help insulate the company from the kind of outrage it experienced in recent weeks.

As one former curator put it: “I don’t think any of us expected for this to become a story.”

from BuzzFeed - Tech https://www.buzzfeed.com/alexkantrowitz/failure-to-accurately-represent-trending-caused-facebook-unn?utm_term=4ldqpia
